Process Module

- class sudio.process.audio_wrap.AFXChannelFillMode(value, names=None, *, module=None, qualname=None, type=None, start=1, boundary=None)

Bases:

Enum

Enumeration of different modes for handling length discrepancies when applying effects to audio channels.

- NONE = 1

- PAD = 2

- TRUNCATE = 3

- REFERENCE_REFINED = 4

- REFERENCE_RAW = 5

- class sudio.process.audio_wrap.AudioWrap(master, record)

Bases:

object

A class that handles audio data processing with caching capabilities.

Initialize the AudioWrap object.

- Parameters:

master (object) – The master instance.

record (AudioMetadata or str) – An instance of AudioMetadata, a string representing audio metadata, or an AudioWrap object itself.

Slicing

The wrapped object can be sliced using standard Python slice syntax x[start: stop: speed_ratio], where x is the wrapped object.

Time Domain Slicing

Use [i: j: k, i(2): j(2): k(2), …, i(n): j(n): k(n)] syntax, where:

- i is the start time,

- j is the stop time,

- k is the speed_ratio, which adjusts the playback speed.

This selects n×m seconds with index times i, i+1, …, j, i(2), i(2)+1, …, j(2), …, i(n), …, j(n), where m = j - i (j > i).

Notes:

- For i > j, i is the stop time and j is the start time, meaning the audio data is read in reverse.

Speed Adjustment

speed_ratio > 1 increases playback speed (reduces duration).

speed_ratio < 1 decreases playback speed (increases duration).

Default speed_ratio is 1.0 (original speed).

Speed adjustments preserve pitch.

Frequency Domain Slicing (Filtering)

Use ['i': 'j': 'filtering options', 'i(2)': 'j(2)': 'options(2)', …, 'i(n)': 'j(n)': 'options(n)'] syntax, where:

- i is the starting frequency,

- j is the stopping frequency (string type, in the same units as fs).

This activates n IIR filters with specified frequencies and options.

Slice Syntax for Filtering

x=None, y='j': Low-pass filter with a cutoff frequency of j.

x='i', y=None: High-pass filter with a cutoff frequency of i.

x='i', y='j': Band-pass filter with critical frequencies i, j.

x='i', y='j', options='scale=[negative value]': Band-stop filter with critical frequencies i, j.
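The mapping in the list above can be sketched as a small decision function. This is only an illustration of the documented rules: `filter_kind` is a hypothetical helper, not part of sudio, and the way sudio actually dispatches filters internally may differ.

```python
from typing import Optional


def filter_kind(start: Optional[str], stop: Optional[str], scale: float = 1.0) -> str:
    """Map one frequency slice to the filter type named in the slice-syntax list.

    Hypothetical helper for illustration only; it is not a sudio function.
    """
    if start is None and stop is None:
        raise ValueError("at least one critical frequency is required")
    if start is None:
        return "low-pass"       # only a stop frequency: [:'j']
    if stop is None:
        return "high-pass"      # only a start frequency: ['i':]
    # Both bounds given: a negative scale selects the band-stop variant.
    return "band-stop" if scale < 0 else "band-pass"


print(filter_kind(None, "400"))          # low-pass
print(filter_kind("200", None))          # high-pass
print(filter_kind("200", "1000"))        # band-pass
print(filter_kind("200", "1000", -0.8))  # band-stop
```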

Filtering Options

- ftype : str, optional

Type of IIR filter to design. Options: 'butter' (default), 'cheby1', 'cheby2', 'ellip', 'bessel'.

- rs : float, optional

Minimum attenuation in the stop band (dB) for Chebyshev and elliptic filters.

- rp : float, optional

Maximum ripple in the passband (dB) for Chebyshev and elliptic filters.

- order : int, optional

The order of the filter. Default is 5.

- scale : float or int, optional

Attenuation or amplification factor. Must be negative for a band-stop filter.
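To make the option-string format concrete, the sketch below parses a string such as 'order=6, scale=-.8' into typed values. `parse_filter_options` is a hypothetical helper written for this page; the default scale of 1.0 is an assumption (the text above only states defaults for ftype and order), and sudio's own parser may behave differently.

```python
def parse_filter_options(options: str) -> dict:
    """Parse 'key=value, key=value' into a dict with typed values.

    Hypothetical parser for illustration; not sudio's implementation.
    """
    # 'butter' and order 5 are the documented defaults; scale=1.0 is assumed.
    parsed = {"ftype": "butter", "order": 5, "scale": 1.0}
    casts = {"ftype": str, "order": int, "scale": float, "rs": float, "rp": float}
    for item in options.split(","):
        item = item.strip()
        if not item:
            continue
        key, _, value = item.partition("=")
        key = key.strip()
        if key not in casts:
            raise ValueError(f"unknown filtering option: {key!r}")
        parsed[key] = casts[key](value.strip())
    return parsed


print(parse_filter_options("order=6, scale=-.8"))
```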

Complex Slicing

Use [a: b, 'i': 'j': 'filtering options', …, 'i(n)': 'j(n)': 'options(n)', …, a(n): b(n), …] or [a: b, [Filter block 1], a(2): b(2), [Filter block 2], …, a(n): b(n), [Filter block n]].

i, j are starting and stopping frequencies.

a, b are starting and stopping times in seconds.

This activates n filter blocks described in the filtering section, each operating within a specific time range.

Notes

Wrapped objects use static memory to reduce dynamic memory usage for large audio data.

Examples

>>> import sudio
>>> master = sudio.Master()
>>> audio = master.add('audio.mp3')
>>> master.echo(audio[5: 10, 36:90] * -10)

In this example, after creating an instance of the Master class, an audio file in MP3 format is loaded. Then, the AudioWrap object is sliced from 5 to 10 seconds and from 36 to 90 seconds, and these two segments are joined. A gain of -10 dB is then applied to the result.

>>> filtered_audio = audio[5: 10, '200': '1000': 'order=6, scale=-.8']
>>> master.echo(filtered_audio)

Here, a 6th-order band-stop filter is applied to the audio segment from 5 to 10 seconds, targeting frequencies between 200 Hz and 1000 Hz. The negative scale value (-0.8) selects the band-stop mode and sets the attenuation factor for this range. Finally, the filtered audio is played through the standard output using the master.echo method.

>>> filtered_audio = audio[: 10, :'400': 'scale=1.1', 5: 15: 1.25, '100': '5000': 'order=10, scale=.8']
>>> master.echo(filtered_audio)

First, the first 10 seconds of audio are sliced and passed through a low-pass filter (LPF) with a 400 Hz cutoff and a 1.1 scaling factor, which boosts the lower frequencies in that segment. Next, the 5 to 15-second slice is processed at 1.25x playback speed, and a 10th-order band-pass filter isolates frequencies between 100 Hz and 5000 Hz with a 0.8 scale factor. Finally, the two processed segments are joined, and the result is played through the standard output using master.echo.

Simple two-band EQ:

>>> filtered_audio = audio[40:60, : '200': 'order=4, scale=.8', '200'::'order=5, scale=.5'] * 1.7
>>> master.echo(filtered_audio)

Here, a two-band EQ adjusts specific frequencies within the 40 to 60 second slice. First, a 4th-order low-pass filter takes everything below 200 Hz and scales it by 0.8 to lower the low frequencies. Next, a 5th-order high-pass filter handles frequencies above 200 Hz, scaled by 0.5 to soften the highs. After filtering, the overall volume is boosted by 1.7 times to balance loudness. Finally, the processed audio is played using master.echo(), which makes it easy to hear how these adjustments shape the sound, for example when reducing noise or enhancing specific frequency ranges.

- name

Descriptor class for accessing and modifying the ‘name’ attribute of an object.

This class is intended to be used as a descriptor for the ‘name’ attribute of an object. It allows getting and setting the ‘name’ attribute through the __get__ and __set__ methods.

- join(*other)

Join multiple audio segments together.

- Parameters:

other – Other audio data to be joined.

- Returns:

The current AudioWrap object after joining with other audio data.

- set_data(data)

Set the audio data when the object is in unpacked mode.

- Parameters:

data – Audio data to be set.

- Returns:

None

- unpack(reset=False, astype=sudio.io.SampleFormat.UNKNOWN, start=None, stop=None, truncate=True)

Unpacks audio data from cached files to dynamic memory.

- Parameters:

reset – Resets the audio pointer to time 0 (Equivalent to slice ‘[:]’).

astype (SampleFormat) – Target sample format for conversion and normalization.

start (float) – Start time; must be less than stop. Negative values are also accepted.

stop (float) – Stop time; must be greater than start. Negative values are also accepted.

truncate (bool) – Whether to truncate the file after writing.

- Return type:

ndarray

- Returns:

Audio data in ndarray format with shape (number of audio channels, block size).

Example

>>> import sudio
>>> import numpy as np
>>> from sudio.io import SampleFormat
>>>
>>> ms = sudio.Master()
>>> song = ms.add('file.ogg')
>>>
>>> fade_length = int(song.get_sample_rate() * 5)
>>> fade_in = np.linspace(0, 1, fade_length)
>>>
>>> with song.unpack(astype=SampleFormat.FLOAT32, start=2.4, stop=20.8) as data:
...     data[:, :fade_length] *= fade_in
...     song.set_data(data)
...
>>> ms.echo(song)

This example shows how to use the sudio library to apply a 5-second fade-in effect to an audio file. We load the audio file (file.ogg), calculate the number of samples for 5 seconds, and use NumPy’s linspace to create a smooth volume increase.

We then unpack the audio data between 2.4 and 20.8 seconds in FLOAT32 format, normalizing it to avoid clipping. The fade-in is applied by multiplying the initial samples by the fade array. Finally, the modified audio is repacked and played back through the Master object's echo method. This demonstrates how sudio handles fades and precise audio format adjustments.
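The array arithmetic behind the fade-in can be tried without any audio file. The sketch below uses a synthetic array in the (channels, samples) layout that unpack is documented to return; the 8000 Hz sample rate and the constant-valued signal are assumptions chosen only to keep the example small.

```python
import numpy as np

sample_rate = 8000                       # assumed rate for the synthetic signal
# Two channels, 10 seconds, constant amplitude 1.0 (stand-in for unpacked data).
data = np.ones((2, sample_rate * 10), dtype=np.float32)

fade_length = sample_rate * 5            # number of samples in 5 seconds
fade_in = np.linspace(0.0, 1.0, fade_length, dtype=np.float32)

# Broadcasting applies the same ramp to every channel (row) in place.
data[:, :fade_length] *= fade_in

print(data.shape)
print(data[0, 0], data[0, fade_length - 1], data[0, -1])
```

Because the ramp has shape (fade_length,) and the slice has shape (channels, fade_length), NumPy broadcasts the ramp across channels, so no explicit loop over channels is needed.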

- invert()

Invert the audio signal by flipping its polarity.

Multiplies the audio data by -1.0, effectively reversing the signal’s waveform around the zero axis. This changes the phase of the audio while maintaining its original magnitude.

- Returns:

A new AudioWrap instance with inverted audio data

Examples:

>>> import sudio
>>> wrap = sudio.Master().add('audio.wav')
>>> inverted_wrap = wrap.invert()  # Flip the audio signal's polarity
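As a quick check of what polarity inversion means numerically, the following sketch works on a plain NumPy array rather than an AudioWrap. The 5 Hz sine is synthetic test data, not something sudio produces.

```python
import numpy as np

t = np.linspace(0.0, 1.0, 1000, endpoint=False)
signal = np.sin(2 * np.pi * 5 * t)        # synthetic 5 Hz sine
inverted = -1.0 * signal                  # polarity flip, as described above

# Magnitudes are unchanged, and mixing the two signals cancels them out.
same_magnitude = np.array_equal(np.abs(signal), np.abs(inverted))
residual = np.max(np.abs(signal + inverted))
print(same_magnitude, residual)
```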

- validate_and_convert(t)

- get_sample_format()

Get the sample format of the audio data.

- Return type:

SampleFormat

- Returns:

The sample format enumeration.

- get_sample_width()

Get the sample width (in bytes) of the audio data.

- Return type:

int

- Returns:

The sample width.

- get_master()

Get the parent object (Master) associated with this AudioWrap object.

- Returns:

The parent Master object.

- get_size()

Get the size of the audio data file.

- Return type:

int

- Returns:

The size of the audio data file in bytes.

- get_sample_rate()

Get the frame rate of the audio data.

- Return type:

int

- Returns:

The frame rate of the audio data.

- get_nchannels()

Get the number of channels in the audio data.

- Return type:

int

- Returns:

The number of channels.

- get_duration()

Get the duration of the audio data in seconds.

- Return type:

float

- Returns:

The duration of the audio data.

- get_data()

Get the audio data either from cached files or dynamic memory.

- Return type:

Union[AudioMetadata, ndarray]

- Returns:

If packed, returns record information. If unpacked, returns the audio data.

- is_packed()

- Return type:

bool

- Returns:

True if the object is in packed mode, False otherwise.

- get(offset=None, whence=None)

Context manager for getting a file handle and managing data.

- Parameters:

offset – Offset to seek within the file.

whence – Reference point for the seek operation.

- Returns:

File handle for reading or writing.

- time2byte(t)

Convert time in seconds to byte offset in audio data.

- Parameters:

t (float) – Time in seconds

- Return type:

int

- Returns:

Byte index corresponding to the specified time

- byte2time(byte)

Convert byte offset to time in seconds.

- Parameters:

byte (int) – Byte index in audio data

- Return type:

float

- Returns:

Time in seconds corresponding to the byte index
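The arithmetic behind such conversions can be sketched for raw interleaved PCM, where one frame holds one sample per channel. The helper names and the frame-alignment choice below are assumptions for illustration; they are not sudio's implementation of time2byte or byte2time.

```python
def time_to_byte(t: float, sample_rate: int, nchannels: int, sample_width: int) -> int:
    """Byte offset of time t, aligned to the start of a frame (assumed layout)."""
    frame_size = nchannels * sample_width        # bytes per frame
    return int(t * sample_rate) * frame_size


def byte_to_time(byte: int, sample_rate: int, nchannels: int, sample_width: int) -> float:
    """Time in seconds for a byte offset, the inverse of time_to_byte."""
    frame_size = nchannels * sample_width
    return byte / (frame_size * sample_rate)


# 44.1 kHz, stereo, 16-bit samples (2 bytes each)
offset = time_to_byte(1.5, 44100, 2, 2)
print(offset)                                    # 264600
print(byte_to_time(offset, 44100, 2, 2))         # 1.5
```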

- afx(cls, *args, start=None, stop=None, duration=None, input_gain_db=0.0, output_gain_db=0.0, wet_mix=None, channel=None, channel_fill_mode=AFXChannelFillMode.REFERENCE_REFINED, channel_fill_value=0.0, transition=None, **kwargs)

Apply an audio effect to the audio data with advanced channel and gain controls.

- Parameters:

cls (type[FX]) – Effect class to apply (must be a subclass of FX)

start (float, optional) – Start time for effect application (optional)

stop (float, optional) – Stop time for effect application (optional)

input_gain_db (float, optional) – Input gain in decibels, defaults to 0.0

output_gain_db (float, optional) – Output gain in decibels, defaults to 0.0

wet_mix (float, optional) – Effect mix ratio (0.0 to 1.0) that blends the original and processed signals. Note: not supported for all effects.

channel (int, optional) – Specific channel to apply the effect to in multi-channel audio. Only applicable for multi-channel audio (>1 channel). Raises TypeError if used in mono mode.

channel_fill_mode (AFXChannelFillMode, optional) – Strategy for handling length mismatches between original and processed (refined) audio:

- AFXChannelFillMode.PAD: Pad the shorter audio with the specified fill value

- AFXChannelFillMode.TRUNCATE: Truncate to the shortest audio length

- AFXChannelFillMode.REFERENCE_RAW: If the refined audio is shorter, pad it with the fill value; if the refined audio is longer, truncate it to the original audio length

- AFXChannelFillMode.REFERENCE_REFINED: If the refined audio is shorter, truncate the original audio to the refined length; if the refined audio is longer, pad the original audio with the fill value

channel_fill_value (float, optional) – Value used for padding when channel_fill_mode is PAD

- Returns:

New AudioWrap instance with applied effect

- Return type:

AudioWrap

- Raises:

TypeError – If the effect class is not supported, if the channel parameter is invalid, or if channel is used in mono mode

AttributeError – If audio data is not packed

RuntimeError – If channel dimensions are inconsistent
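The channel_fill_mode strategies documented for afx can be illustrated with NumPy. In this sketch `match_lengths` is a hypothetical helper and the mode names are plain strings mirroring the AFXChannelFillMode members; NONE is left out because its behavior is not described here. It is a reading of the documented rules, not sudio's code.

```python
import numpy as np


def match_lengths(original, refined, mode="REFERENCE_REFINED", fill_value=0.0):
    """Resize (original, refined) to a common length along the last axis.

    Hypothetical helper for illustration; not part of sudio.
    """
    n_orig, n_ref = original.shape[-1], refined.shape[-1]
    if mode == "PAD":
        target = max(n_orig, n_ref)      # pad the shorter one
    elif mode == "TRUNCATE":
        target = min(n_orig, n_ref)      # cut both to the shortest
    elif mode == "REFERENCE_RAW":
        target = n_orig                  # original length is the reference
    elif mode == "REFERENCE_REFINED":
        target = n_ref                   # processed length is the reference
    else:
        raise ValueError(f"unsupported mode: {mode}")

    def resize(x):
        if x.shape[-1] >= target:
            return x[..., :target]
        pad = [(0, 0)] * (x.ndim - 1) + [(0, target - x.shape[-1])]
        return np.pad(x, pad, constant_values=fill_value)

    return resize(original), resize(refined)


original = np.ones((2, 5))
refined = np.ones((2, 3))
for mode in ("PAD", "TRUNCATE", "REFERENCE_RAW", "REFERENCE_REFINED"):
    o, r = match_lengths(original, refined, mode)
    print(mode, o.shape, r.shape)
```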

- class sudio.process.fx.tempo.Tempo(*args, **kwargs)

Bases:

FX

Initialize the Tempo audio effect processor for time stretching.

Configures time stretching with support for both streaming and offline audio processing, optimized for 32-bit floating-point precision.

Notes:

Implements advanced time stretching using WSOLA (Waveform Similarity Overlap-Add) algorithm to modify audio tempo without altering pitch.

- process(data, tempo=1.0, envelope=[], **kwargs)

Perform time stretching on the input audio data without altering pitch.

This method allows tempo modification through uniform or dynamic tempo changes, utilizing an advanced Waveform Similarity Overlap-Add (WSOLA) algorithm to manipulate audio duration while preserving sound quality and spectral characteristics.

Parameters:

- data : np.ndarray

Input audio data as a NumPy array. Supports mono and multi-channel audio. Recommended data type is float32.

- tempo : float, optional

Tempo scaling factor for time stretching. Default is 1.0.

  - 1.0 means no change in tempo/duration

  - < 1.0 slows down audio (increases duration)

  - > 1.0 speeds up audio (decreases duration)

Examples:

  - 0.5: doubles audio duration

  - 2.0: halves audio duration

- envelope : np.ndarray, optional

Dynamic tempo envelope for time-varying tempo modifications. Allows non-uniform tempo changes across the audio signal. Default is an empty list (uniform tempo modification).

Example:

  - A varying array of tempo ratios can create complex time-stretching effects

- **kwargs : dict

Additional keyword arguments passed to the underlying tempo algorithm. Allows fine-tuning of advanced parameters such as:

  - sequence_ms: Sequence length for time-stretching window

  - seekwindow_ms: Search window for finding similar waveforms

  - overlap_ms: Crossfade overlap between segments

  - enable_spline: Enable spline interpolation for envelope

  - spline_sigma: Gaussian smoothing parameter for envelope

Returns:

np.ndarray

Time-stretched audio data with the same number of channels and original data type as the input.

Examples:

>>> slow_audio = tempo_processor.process(audio_data, tempo=0.5)  # Slow down audio
>>> fast_audio = tempo_processor.process(audio_data, tempo=1.5)  # Speed up audio
>>> dynamic_tempo = tempo_processor.process(audio_data, envelope=[0.5, 1.0, 2.0])  # Dynamic tempo

Notes:

Preserves audio quality with minimal artifacts

Uses advanced WSOLA algorithm for smooth time stretching

Supports both uniform and dynamic tempo modifications

Computationally efficient implementation

Does not change the pitch of the audio

Warnings:

Extreme tempo modifications (very low or high values) may introduce audible artifacts or sound distortions

Performance and quality may vary depending on audio complexity
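The relationship between a uniform tempo factor and the resulting duration, as stated in the tempo parameter description, is a simple division. The helper below is hypothetical and only restates that arithmetic; a real WSOLA implementation may produce a length that differs by a few samples.

```python
def stretched_duration(duration_s: float, tempo: float) -> float:
    """Expected duration after uniform time stretching (illustrative only)."""
    if tempo <= 0:
        raise ValueError("tempo must be positive")
    return duration_s / tempo


print(stretched_duration(10.0, 0.5))   # 20.0 (slower, twice as long)
print(stretched_duration(10.0, 2.0))   # 5.0 (faster, half as long)
```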

- class sudio.process.fx.gain.Gain(*args, **kwargs)

Bases:

FX

Initialize the base Effects (FX) processor with audio configuration and processing features.

This method sets up the fundamental parameters and capabilities for audio signal processing, providing a flexible foundation for various audio effects and transformations.

Parameters:

- data_size : int, optional

Total size of the audio data in samples. Helps in memory allocation and processing planning.

- sample_rate : int, optional

Number of audio samples processed per second. Critical for time-based effects and analysis.

- nchannels : int, optional

Number of audio channels (mono, stereo, etc.). Determines multi-channel processing strategies.

- sample_format : SampleFormat, optional

Represents the audio data’s numeric representation and precision. Defaults to UNKNOWN if not specified.

- data_nperseg : int, optional

Number of samples per segment, useful for segmented audio processing techniques.

- sample_type : str, optional

Additional type information about the audio samples.

- sample_width : int, optional

Bit depth or bytes per sample, influencing audio resolution and dynamic range.

- streaming_feature : bool, default True

Indicates if the effect supports real-time, streaming audio processing.

- offline_feature : bool, default True

Determines if the effect can process entire audio files or large datasets.

- preferred_datatype : SampleFormat, optional

Suggested sample format for optimal processing. Defaults to UNKNOWN.

Notes:

This base class provides a standardized interface for audio effect processors, enabling consistent configuration and feature detection across different effects.

- process(data, gain_db=0.0, channel=None, **kwargs)

Apply dynamic gain adjustment to audio signals with soft clipping.

Modify audio amplitude using decibel-based gain control, featuring built-in soft clipping to prevent harsh distortion and maintain signal integrity.

- Return type:

ndarray

Parameters:

- data : numpy.ndarray

Input audio data to be gain-processed. Supports single and multi-channel inputs.

- gain_db : float or int, optional

Gain adjustment in decibels:

  - 0.0 (default): No volume change

  - Negative values: Reduce volume

  - Positive values: Increase volume

Additional keyword arguments are ignored in this implementation.

Returns:

numpy.ndarray

Gain-adjusted audio data with preserved dynamic range and minimal distortions

Examples:

>>> import sudio
>>> from sudio.process.fx import Gain
>>> su = sudio.Master()
>>> rec = su.add('file.mp3')
>>> rec.afx(Gain, gain_db=-30, start=2.7, stop=7)
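For readers unfamiliar with decibel gain, the sketch below shows the standard conversion from dB to a linear amplitude factor, followed by one possible soft clipper. The use of tanh is an assumption made for illustration; the clipping curve that Gain actually uses is not specified on this page.

```python
import numpy as np


def apply_gain_db(data: np.ndarray, gain_db: float = 0.0) -> np.ndarray:
    """Scale by a dB gain, then soft-clip (illustrative sketch, not sudio code)."""
    factor = 10.0 ** (gain_db / 20.0)     # dB -> linear amplitude factor
    boosted = data * factor
    # Assumed soft clipper: tanh is close to linear for small values and
    # flattens smoothly, so the output never exceeds the range [-1, 1].
    return np.tanh(boosted)


samples = np.array([0.01, -0.5, 0.9])
quiet = apply_gain_db(samples, gain_db=-30.0)   # attenuated
loud = apply_gain_db(samples, gain_db=40.0)     # heavily boosted, then limited
print(quiet)
print(loud)
```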

- class sudio.process.fx.fx.FX(*args, data_size=None, sample_rate=None, nchannels=None, sample_format=sudio.io.SampleFormat.UNKNOWN, data_nperseg=None, sample_type='', sample_width=None, streaming_feature=True, offline_feature=True, preferred_datatype=sudio.io.SampleFormat.UNKNOWN, **kwargs)

Bases:

object

Initialize the base Effects (FX) processor with audio configuration and processing features.

This method sets up the fundamental parameters and capabilities for audio signal processing, providing a flexible foundation for various audio effects and transformations.

Parameters:

- data_size : int, optional

Total size of the audio data in samples. Helps in memory allocation and processing planning.

- sample_rate : int, optional

Number of audio samples processed per second. Critical for time-based effects and analysis.

- nchannels : int, optional

Number of audio channels (mono, stereo, etc.). Determines multi-channel processing strategies.

- sample_format : SampleFormat, optional

Represents the audio data’s numeric representation and precision. Defaults to UNKNOWN if not specified.

- data_nperseg : int, optional

Number of samples per segment, useful for segmented audio processing techniques.

- sample_type : str, optional

Additional type information about the audio samples.

- sample_width : int, optional

Bit depth or bytes per sample, influencing audio resolution and dynamic range.

- streaming_feature : bool, default True

Indicates if the effect supports real-time, streaming audio processing.

- offline_feature : bool, default True

Determines if the effect can process entire audio files or large datasets.

- preferred_datatype : SampleFormat, optional

Suggested sample format for optimal processing. Defaults to UNKNOWN.

Notes:

This base class provides a standardized interface for audio effect processors, enabling consistent configuration and feature detection across different effects.

- is_streaming_supported()

Determine if audio streaming is supported for this effect.

- Return type:

bool

- is_offline_supported()

Check if file/batch audio processing is supported.

- Return type:

bool

- get_preferred_datatype()

Retrieve the recommended sample format for optimal processing.

- Return type:

SampleFormat

- get_data_size()

Get the total size of audio data in samples.

- Return type:

int

- get_sample_rate()

Retrieve the audio sampling rate.

- Return type:

int

- get_nchannels()

Get the number of audio channels.

- Return type:

int

- get_sample_format()

Retrieve the audio sample format.

- Return type:

SampleFormat

- get_sample_type()

Get additional sample type information.

- Return type:

str

- get_sample_width()

Retrieve the bit depth or bytes per sample.

- process(**kwargs)

Base method for audio signal processing.

This method should be implemented by specific effect classes to define their unique audio transformation logic.

- class sudio.process.fx.channel_mixer.ChannelMixer(*args, **kwargs)

Bases:

FX

Initialize the ChannelMixer audio effect processor.

Parameters:

- *args : Variable positional arguments

Arguments to be passed to the parent FX class initializer.

- **kwargs : dict, optional

Additional keyword arguments for configuration.

- process(data, correlation=None, **kwargs)

Apply channel mixing to the input audio signal based on a correlation matrix.

Manipulates multi-channel audio by applying inter-channel correlation transformations while preserving signal characteristics.

- Return type:

ndarray

Parameters:

- data : numpy.ndarray

Input multi-channel audio data. Must have at least 2 dimensions. Shape expected to be (num_channels, num_samples).

- correlation : Union[List[List[float]], numpy.ndarray], optional

Correlation matrix defining inter-channel relationships.

  - If None, returns input data unchanged

  - Must be a square matrix matching number of input channels

  - Values must be between -1 and 1

  - Matrix shape: (num_channels, num_channels)

- **kwargs : dict, optional

Additional processing parameters (currently unused).

Returns:

numpy.ndarray

Channel-mixed audio data with the same shape as input.

Raises:

- ValueError

If input data has fewer than 2 channels

If correlation matrix is incorrectly shaped

If correlation matrix contains values outside [-1, 1]

Examples:

>>> import sudio
>>> from sudio.process.fx import ChannelMixer
>>> su = sudio.Master()
>>> rec = su.add('file.mp3')
>>> newrec = rec.afx(ChannelMixer, correlation=[[.4, -.6], [0, 1]])  # for two channels
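One plausible reading of the correlation matrix is a plain matrix product, where row k holds the weight of each input channel in output channel k. The sketch below follows that assumption and reproduces the documented validation rules; `mix_channels` is a hypothetical function, and ChannelMixer's actual computation may differ.

```python
import numpy as np


def mix_channels(data, correlation=None):
    """Mix channels with a square weight matrix (illustrative sketch only)."""
    data = np.asarray(data, dtype=np.float64)
    if correlation is None:
        return data                                   # unchanged, as documented
    if data.ndim < 2 or data.shape[0] < 2:
        raise ValueError("expected at least 2 channels, shape (num_channels, num_samples)")
    matrix = np.asarray(correlation, dtype=np.float64)
    nch = data.shape[0]
    if matrix.shape != (nch, nch):
        raise ValueError(f"correlation must have shape ({nch}, {nch})")
    if np.any(np.abs(matrix) > 1.0):
        raise ValueError("correlation values must lie in [-1, 1]")
    # Assumption: output channel k is the weighted sum given by matrix row k.
    return matrix @ data


stereo = np.array([[1.0, 1.0, 1.0],    # left
                   [2.0, 2.0, 2.0]])   # right
mixed = mix_channels(stereo, [[0.4, -0.6], [0.0, 1.0]])
print(mixed)
```

With this matrix the second output channel is the unchanged right channel, while the first is 0.4 times the left minus 0.6 times the right.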

- class sudio.process.fx.pitch_shifter.PitchShifter(*args, **kwargs)

Bases:

FX

Initialize the PitchShifter audio effect processor.

This method configures the PitchShifter effect with specific processing features, setting up support for both streaming and offline audio processing.

- process(data, semitones=0.0, cent=0.0, ratio=1.0, envelope=[], **kwargs)

Perform pitch shifting on the input audio data.

This method allows pitch modification through multiple parametrization approaches:

1. Semitone and cent-based pitch shifting

2. Direct ratio-based pitch shifting

3. Envelope-based dynamic pitch shifting

Parameters:

- data : np.ndarray

Input audio data as a NumPy array. Supports mono and multi-channel audio. Recommended data type is float32.

- semitones : np.float32, optional

Number of semitones to shift the pitch. Positive values increase pitch, negative values decrease pitch. Default is 0.0 (no change).

Example:

  - 12.0 shifts up one octave

  - -12.0 shifts down one octave

- cent : np.float32, optional

Fine-tuning pitch adjustment in cents (1/100th of a semitone). Allows precise micro-tuning between semitones. Default is 0.0.

Example:

  - 50.0 shifts up half a semitone

  - -25.0 shifts down a quarter semitone

- ratio : np.float32, optional

Direct pitch ratio modifier. Default is 1.0.

  - 1.0 means no change

  - > 1.0 increases pitch

  - < 1.0 decreases pitch

Note: When semitones or cents are used, this ratio is multiplicative.

- envelope : np.ndarray, optional

Dynamic pitch envelope for time-varying pitch shifting. If provided, allows non-uniform pitch modifications across the audio. Default is an empty list (uniform pitch shifting).

Example:

  - A varying array of ratios can create complex pitch modulations

- **kwargs : dict

Additional keyword arguments passed to the underlying pitch shifting algorithm. Allows fine-tuning of advanced parameters like:

  - sample_rate: Audio sample rate

  - frame_length: Processing frame size

  - converter_type: Resampling algorithm

Returns:

np.ndarray

Pitch-shifted audio data with the same number of channels as input.

Examples:

>>> record = record.afx(PitchShifter, start=30, envelope=[1, 3, 1, 1])  # Dynamic pitch shift
>>> record = record.afx(PitchShifter, semitones=4)  # Shift up 4 semitones

Notes:

Uses high-quality time-domain pitch shifting algorithm

Preserves audio quality with minimal artifacts

Supports both uniform and dynamic pitch modifications
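The semitones, cent, and ratio parameters can be combined into a single frequency ratio using the equal-temperament relation (12 semitones per octave, 100 cents per semitone). The helper below is hypothetical; it assumes the multiplicative combination mentioned in the ratio note and is not taken from the PitchShifter source.

```python
def pitch_ratio(semitones: float = 0.0, cent: float = 0.0, ratio: float = 1.0) -> float:
    """Overall frequency ratio for a semitone/cent shift times a direct ratio.

    Illustrative sketch assuming equal temperament; not sudio's implementation.
    """
    return ratio * 2.0 ** ((semitones + cent / 100.0) / 12.0)


print(pitch_ratio(semitones=12.0))    # 2.0 (one octave up)
print(pitch_ratio(semitones=-12.0))   # 0.5 (one octave down)
print(pitch_ratio(cent=50.0))         # slightly above 1.0 (half a semitone up)
```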

- class sudio.process.fx.fade_envelope.FadePreset(value, names=None, *, module=None, qualname=None, type=None, start=1, boundary=None)

Bases:

Enum

- class sudio.process.fx.fade_envelope.FadeEnvelope(*args, **kwargs)

Bases:

FX

Initialize the base Effects (FX) processor with audio configuration and processing features.

This method sets up the fundamental parameters and capabilities for audio signal processing, providing a flexible foundation for various audio effects and transformations.

Parameters:

- data_size : int, optional

Total size of the audio data in samples. Helps in memory allocation and processing planning.

- sample_rate : int, optional

Number of audio samples processed per second. Critical for time-based effects and analysis.

- nchannels : int, optional

Number of audio channels (mono, stereo, etc.). Determines multi-channel processing strategies.

- sample_format : SampleFormat, optional

Represents the audio data’s numeric representation and precision. Defaults to UNKNOWN if not specified.

- data_nperseg : int, optional

Number of samples per segment, useful for segmented audio processing techniques.

- sample_type : str, optional

Additional type information about the audio samples.

- sample_width : int, optional

Bit depth or bytes per sample, influencing audio resolution and dynamic range.

- streaming_feature : bool, default True

Indicates if the effect supports real-time, streaming audio processing.

- offline_feature : bool, default True

Determines if the effect can process entire audio files or large datasets.

- preferred_datatype : SampleFormat, optional

Suggested sample format for optimal processing. Defaults to UNKNOWN.

Notes:

This base class provides a standardized interface for audio effect processors, enabling consistent configuration and feature detection across different effects.

- process(data, preset=sudio.process.fx._fade_envelope.FadePreset.SMOOTH_ENDS, **kwargs)

Shape your audio’s dynamics with customizable envelope effects!

This method allows you to apply various envelope shapes to your audio signal, transforming its amplitude characteristics with precision and creativity. Whether you want to smooth out transitions, create pulsing effects, or craft unique fade patterns, this method has you covered.

- Return type:

ndarray

Parameters:

- data : numpy.ndarray

Your input audio data. Can be a single channel or multi-channel array. The envelope will be applied across the last dimension of the array.

- preset : FadePreset or numpy.ndarray, optional

Define how you want to shape your audio’s amplitude:

If you choose a FadePreset (default: SMOOTH_ENDS):

Select from predefined envelope shapes like smooth fades, bell curves, pulse effects, tremors, and more. Each preset offers a unique way to sculpt your sound.

If you provide a custom numpy array:

Create your own bespoke envelope by passing in a custom amplitude array. This gives you ultimate flexibility in sound design.

Additional keyword arguments (optional):

Customize envelope generation with these powerful parameters:

Envelope Generation Parameters:

enable_spline : bool

Smooth your envelope with spline interpolation. Great for creating more organic, natural-feeling transitions.

spline_sigma : float, default varies

Control the smoothness of spline interpolation. Lower values create sharper transitions, higher values create more gradual blends.

fade_max_db : float, default 0.0

Set the maximum amplitude in decibels. Useful for controlling peak loudness.

fade_max_min_db : float, default -60.0

Define the minimum amplitude in decibels. Helps create subtle or dramatic fades.

fade_attack : float, optional

Specify the proportion of the audio dedicated to the attack phase. Influences how quickly the sound reaches its peak volume.

fade_release : float, optional

Set the proportion of the audio dedicated to the release phase. Controls how the sound tapers off.

buffer_size : int, default 400

Adjust the internal buffer size for envelope generation.

sawtooth_freq : float, default 37.7

For presets involving sawtooth wave modulation, control the frequency of the underlying oscillation.

Returns:

numpy.ndarray

Your processed audio data with the envelope applied. Maintains the same shape and type as the input data.

Examples:

>>> import sudio
>>> from sudio.process.fx import FadeEnvelope, FadePreset
>>> su = sudio.Master()
>>> rec = su.add('file.mp3')
>>> rec.afx(FadeEnvelope, preset=FadePreset.PULSE, start=0, stop=10)

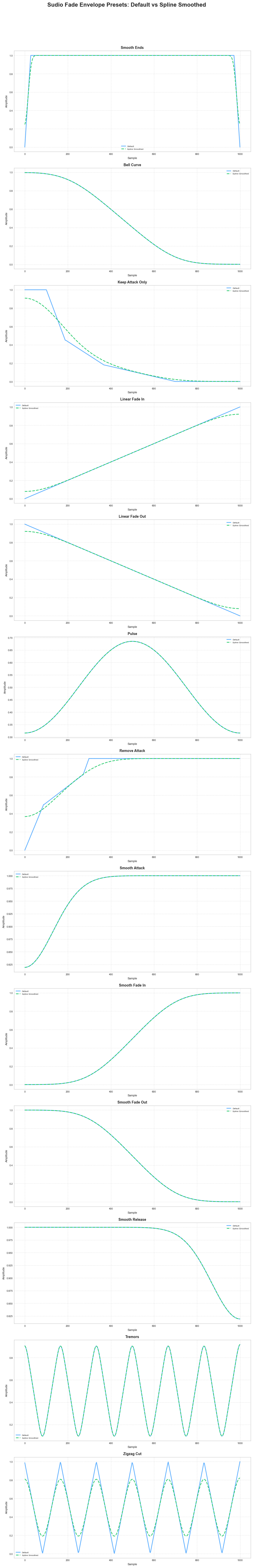

The Fade Envelope module offers a rich set of predefined envelopes to shape audio dynamics. Each preset provides a unique way to modify the amplitude characteristics of an audio signal.

Preset Catalog

Available Presets

Smooth Ends

Bell Curve

Keep Attack Only

Linear Fade In

Linear Fade Out

Pulse

Remove Attack

Smooth Attack

Smooth Fade In

Smooth Fade Out

Smooth Release

Tremors

Zigzag Cut

Usage Example

from sudio.process.fx import FadeEnvelope, FadePreset

# Apply a smooth fade in to an audio signal
fx = FadeEnvelope()
processed_audio = fx.process(
    audio_data,
    preset=FadePreset.SMOOTH_FADE_IN
)

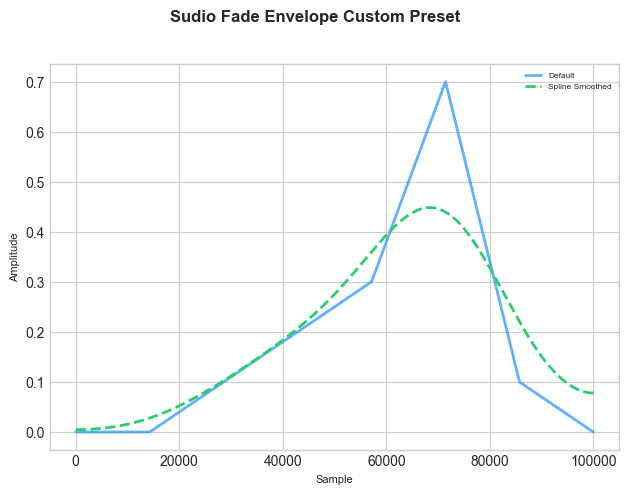

Making Custom Presets

Custom presets in Sudio let you shape your audio’s dynamics in unique ways. Pass a numpy array to the FadeEnvelope effect, and Sudio’s processing transforms it into a smooth, musically coherent envelope using interpolation and a Gaussian filter. You can create precise sound manipulations, control the wet/dry mix, and adjust the output gain. In this mode, the sawtooth_freq, fade_release, and fade_attack parameters are unavailable.

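import numpy as np
import sudio
from sudio.process.fx import FadeEnvelope

su = sudio.Master()
song = su.add('file.mp3')  # assumed input file, as in the earlier examples

s = song[10:30]
custom_preset = np.array([0.0, 0.0, 0.1, 0.2, 0.3, 0.7, 0.1, 0.0])

# afx returns a new AudioWrap, so keep the result
s = s.afx(
    FadeEnvelope,
    preset=custom_preset,
    enable_spline=True,
    start=10.5,
    stop=25,
    output_gain_db=-5,
    wet_mix=.9
)
su.echo(s)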
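The idea of stretching a short custom preset over a whole signal and blending it with the dry audio can be sketched with NumPy alone. Here linear interpolation (np.interp) stands in for the spline and Gaussian smoothing mentioned in this section, and `apply_custom_envelope` is a hypothetical helper; the result illustrates the concept and will not match FadeEnvelope's output exactly.

```python
import numpy as np


def apply_custom_envelope(data, preset, wet_mix=1.0):
    """Stretch preset over the last axis of data and blend wet/dry signals.

    Illustrative sketch only; linear interpolation replaces sudio's smoothing.
    """
    n = data.shape[-1]
    preset = np.asarray(preset, dtype=np.float64)
    # Place the preset points evenly across the signal, then fill in between.
    x_points = np.linspace(0.0, 1.0, len(preset))
    envelope = np.interp(np.linspace(0.0, 1.0, n), x_points, preset)
    wet = data * envelope                       # broadcast over channels
    return wet_mix * wet + (1.0 - wet_mix) * data


custom_preset = np.array([0.0, 0.0, 0.1, 0.2, 0.3, 0.7, 0.1, 0.0])
signal = np.ones((2, 15))                       # synthetic 2-channel signal
shaped = apply_custom_envelope(signal, custom_preset, wet_mix=1.0)
print(shaped[0])
```

With wet_mix below 1.0, part of the unprocessed signal is mixed back in, so the envelope never fully silences the audio.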